EU Strikes World's First Sweeping AI Regulation Deal



The AI industry has been locked in a heated arms race over the past few years, with billions poured into the field. However, governments and the public have voiced concerns about everything from the economic impact, such as AI taking over jobs, to fears of misinformation and privacy violations.

On Friday, the European Union prided itself on being the first to hammer out a sweeping deal on comprehensive regulation of artificial intelligence (AI).

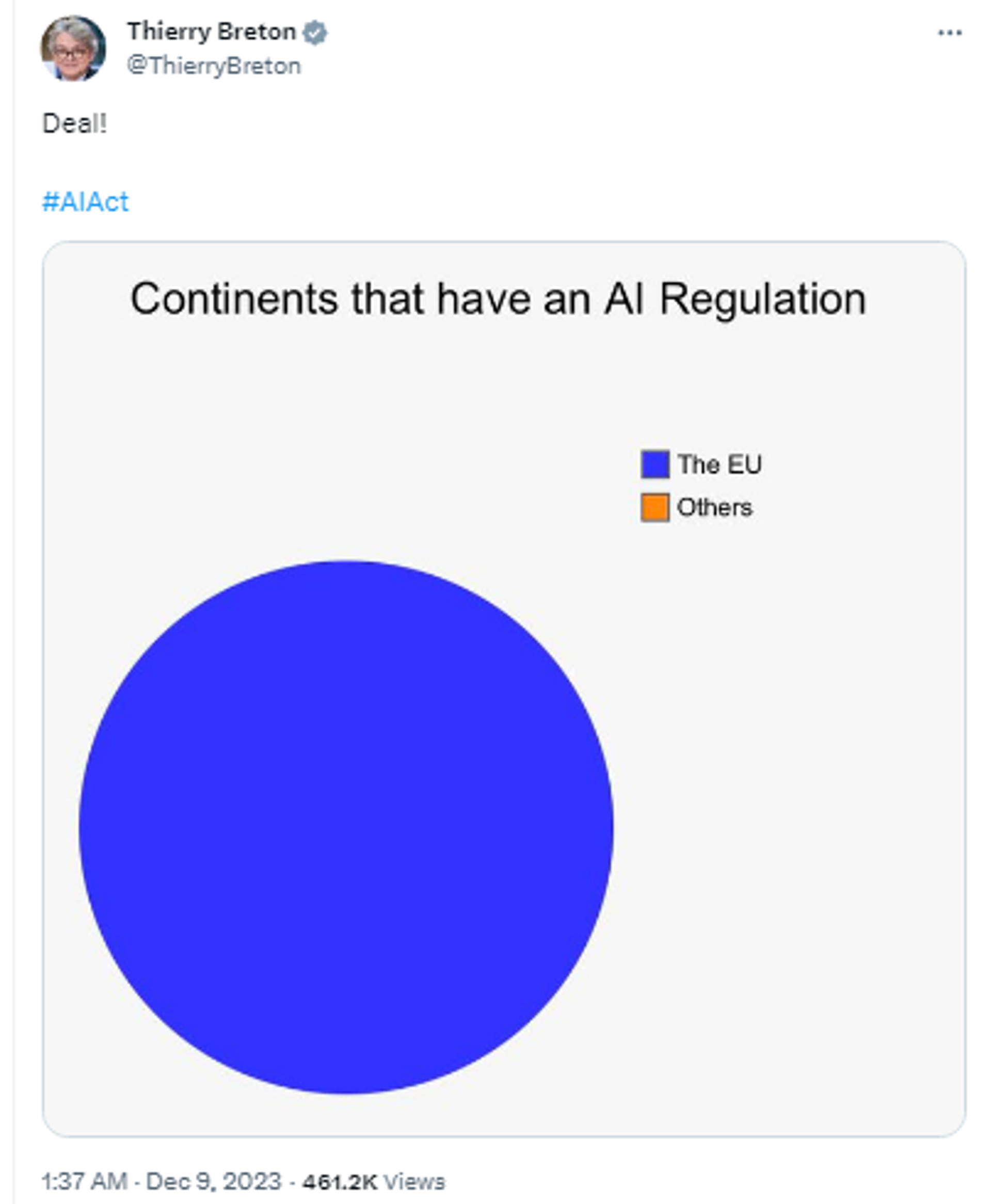

Thierry Breton, the European Union's Commissioner for Internal Market, took to the social media platform X to hail the AI Act for encouraging innovation and harnessing the potential benefits of the rapidly advancing technology while mitigating its risks. EU lawmakers spent a lot of time seeking “the right balance between making the most of AI potential to support law enforcement while protecting our citizens’ fundamental rights,” he said in his X post, adding: “We do not want any mass surveillance in Europe.”

Screenshot of X post by Thierry Breton, Commissioner for Internal Market of the European Union.

© Photo : ThierryBreton/X

It took 37 hours of marathon talks between delegates from the European Commission, the European Parliament, and the 27 member states to agree on "controls" for generative AI tools such as OpenAI's popular chatbot ChatGPT and Google's rival, Bard. The talks ran long because negotiators had to strike a careful balance: while addressing safety and privacy concerns, they also had to avoid overregulation that could hamstring European AI start-ups competing with US firms such as OpenAI and Google, according to reports.

So, what is in the new provisional AI regulation deal? It will ban:

Use of real-time surveillance and biometric technologies. Exceptions will allow police to use these technologies in the event of an unexpected terrorist threat, to search for victims, or to prosecute serious crimes;

Untargeted extraction of facial images from the Internet or CCTV footage to establish facial recognition databases;

Emotion recognition in the workplace and educational institutions;

Social scoring, that is, rating individuals based on their social behavior or personal characteristics;

AI systems that manipulate human behavior to circumvent people's free will;

AI used to exploit people’s vulnerabilities, such as those related to age or disability.

Special obligations, such as "fundamental rights impact assessments," are set out for "high-risk" AI systems, meaning those that could cause significant harm to health, safety, fundamental rights, the environment, democracy, or the rule of law. Stricter rules also apply to the most powerful general-purpose AI models, and whether a model falls into that tier depends on the total amount of computation used to train it, measured in floating point operations (FLOPs).
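The compute criterion mentioned above counts the total number of floating point operations spent on training, not operations per second, which is a hardware throughput figure. The minimal Python sketch below illustrates the difference under stated assumptions: it estimates total training compute with the widely used rule of thumb of roughly 6 FLOPs per parameter per training token, compares the result with an assumed threshold of 10^25 FLOPs (the figure reported for the deal, not given in this article), and converts a FLOP count into training time at a given throughput. The function names, model sizes and throughput are hypothetical, and the heuristic is an approximation, not the regulation's own method.

```python
# Assumed threshold: 10**25 total training FLOPs, the figure reported for the deal
# (not stated in this article).
SYSTEMIC_RISK_THRESHOLD_FLOPS = 10**25

SECONDS_PER_DAY = 86_400


def estimate_training_flops(n_params: int, n_tokens: int) -> int:
    """Rough total training compute for a dense transformer.

    Uses the common rule of thumb of about 6 floating point operations per
    parameter per training token (an approximation, not a regulatory method).
    """
    return 6 * n_params * n_tokens


def above_threshold(total_flops: int, threshold: int = SYSTEMIC_RISK_THRESHOLD_FLOPS) -> bool:
    """True if the estimated total training compute exceeds the assumed threshold."""
    return total_flops > threshold


def training_days(total_flops: float, sustained_flops_per_second: float) -> float:
    """Convert a total FLOP count into wall-clock days at a given throughput (FLOP/s)."""
    return total_flops / sustained_flops_per_second / SECONDS_PER_DAY


if __name__ == "__main__":
    # Hypothetical models: (parameters, training tokens)
    models = {
        "70B params, 2T tokens": (70 * 10**9, 2 * 10**12),
        "1T params, 10T tokens": (10**12, 10 * 10**12),
    }
    for name, (params, tokens) in models.items():
        flops = estimate_training_flops(params, tokens)
        print(f"{name}: ~{flops:.2e} FLOPs, above threshold: {above_threshold(flops)}")
    # The same total of 10**25 FLOPs takes about 116 days at a sustained 10**18 FLOP/s.
    print(f"{training_days(10**25, 10**18):.1f} days")
```

In this sketch the 70-billion-parameter example lands at about 8.4 × 10^23 FLOPs, below the assumed threshold, while the larger one exceeds it; the last line shows that a per-second rate only tells you how long a fixed amount of training compute takes.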

Additional guardrails require general-purpose AI (GPAI) systems, and the GPAI models they are based on, to meet “transparency requirements.” These include technical documentation and detailed summaries of the content used to train them.

Companies that fail to comply would face fines ranging from €35 million or 7% of global turnover, whichever is higher, down to €7.5 million or 1.5% of turnover, depending on the infringement and the size of the company. The EU began working on draft measures to bring oversight to tech in 2018, and these eventually evolved into the AI Act. The provisional legislation now needs formal approval from EU member states and the European Parliament.
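Under the deal, each fine tier is reportedly the higher of a fixed amount and a percentage of the company's global annual turnover. The minimal Python sketch below makes that arithmetic concrete for the two tiers quoted in the article. The function name, the basis-point representation (chosen so the math stays in exact integers) and the example turnovers are hypothetical; the sketch ignores any special treatment for smaller companies and is not a legal calculation.

```python
def max_fine_eur(global_turnover_eur: int, fixed_amount_eur: int, turnover_bps: int) -> int:
    """Return the higher of a fixed amount and a share of global turnover.

    The share is given in basis points (1 bp = 0.01%), so the arithmetic
    stays in exact integers.
    """
    turnover_based = global_turnover_eur * turnover_bps // 10_000
    return max(fixed_amount_eur, turnover_based)


# Tiers quoted in the article: (fixed amount in EUR, share of turnover in basis points).
TOP_TIER = (35_000_000, 700)      # EUR 35 million or 7%
BOTTOM_TIER = (7_500_000, 150)    # EUR 7.5 million or 1.5%

if __name__ == "__main__":
    # Hypothetical turnovers: a large firm (EUR 2 billion) and a smaller one (EUR 100 million).
    for turnover in (2_000_000_000, 100_000_000):
        for name, (fixed, bps) in (("top tier", TOP_TIER), ("bottom tier", BOTTOM_TIER)):
            fine = max_fine_eur(turnover, fixed, bps)
            print(f"turnover EUR {turnover:,}: {name} cap EUR {fine:,}")
```

For a €2 billion turnover, the top-tier cap works out to €140 million because 7% exceeds the fixed amount; for a €100 million turnover, 7% is only €7 million, so the fixed €35 million applies instead.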

As the AI race has revved up, concerns and even fears have spiraled; OpenAI’s ChatGPT (Chat Generative Pre-trained Transformer), launched in November 2022, already boasts over 100 million users. Cybersecurity experts have warned that voice-cloning software and "deepfake" technologies could be used to circumvent biometric security measures or to spread misinformation. Privacy experts have been up in arms over the possibility of AI-powered facial recognition software being used to harvest information online. Other concerns center on how AI programs such as chatbots and image-generation software were built on people's intellectual property. There are also economic worries: one Goldman Sachs report estimated that AI could expose the equivalent of 300 million full-time jobs to automation.